Apple vs. The FBI - Court Orders Creation of iOS Backdoor

18-02-2016 3:08am #1

http://www.apple.com/customer-letter/

Tim Cook, CEO wrote: February 16, 2016

A Message to Our Customers

The United States government has demanded that Apple take an unprecedented step which threatens the security of our customers. We oppose this order, which has implications far beyond the legal case at hand.

This moment calls for public discussion, and we want our customers and people around the country to understand what is at stake.

The Need for Encryption

Smartphones, led by iPhone, have become an essential part of our lives. People use them to store an incredible amount of personal information, from our private conversations to our photos, our music, our notes, our calendars and contacts, our financial information and health data, even where we have been and where we are going.

All that information needs to be protected from hackers and criminals who want to access it, steal it, and use it without our knowledge or permission. Customers expect Apple and other technology companies to do everything in our power to protect their personal information, and at Apple we are deeply committed to safeguarding their data.

Compromising the security of our personal information can ultimately put our personal safety at risk. That is why encryption has become so important to all of us.

For many years, we have used encryption to protect our customers’ personal data because we believe it’s the only way to keep their information safe. We have even put that data out of our own reach, because we believe the contents of your iPhone are none of our business.

The San Bernardino Case

We were shocked and outraged by the deadly act of terrorism in San Bernardino last December. We mourn the loss of life and want justice for all those whose lives were affected. The FBI asked us for help in the days following the attack, and we have worked hard to support the government’s efforts to solve this horrible crime. We have no sympathy for terrorists.

When the FBI has requested data that’s in our possession, we have provided it. Apple complies with valid subpoenas and search warrants, as we have in the San Bernardino case. We have also made Apple engineers available to advise the FBI, and we’ve offered our best ideas on a number of investigative options at their disposal.

We have great respect for the professionals at the FBI, and we believe their intentions are good. Up to this point, we have done everything that is both within our power and within the law to help them. But now the U.S. government has asked us for something we simply do not have, and something we consider too dangerous to create. They have asked us to build a backdoor to the iPhone.

Specifically, the FBI wants us to make a new version of the iPhone operating system, circumventing several important security features, and install it on an iPhone recovered during the investigation. In the wrong hands, this software — which does not exist today — would have the potential to unlock any iPhone in someone’s physical possession.

The FBI may use different words to describe this tool, but make no mistake: Building a version of iOS that bypasses security in this way would undeniably create a backdoor. And while the government may argue that its use would be limited to this case, there is no way to guarantee such control.

The Threat to Data Security

Some would argue that building a backdoor for just one iPhone is a simple, clean-cut solution. But it ignores both the basics of digital security and the significance of what the government is demanding in this case.

In today’s digital world, the “key” to an encrypted system is a piece of information that unlocks the data, and it is only as secure as the protections around it. Once the information is known, or a way to bypass the code is revealed, the encryption can be defeated by anyone with that knowledge.

The government suggests this tool could only be used once, on one phone. But that’s simply not true. Once created, the technique could be used over and over again, on any number of devices. In the physical world, it would be the equivalent of a master key, capable of opening hundreds of millions of locks — from restaurants and banks to stores and homes. No reasonable person would find that acceptable.

The government is asking Apple to hack our own users and undermine decades of security advancements that protect our customers — including tens of millions of American citizens — from sophisticated hackers and cybercriminals. The same engineers who built strong encryption into the iPhone to protect our users would, ironically, be ordered to weaken those protections and make our users less safe.

We can find no precedent for an American company being forced to expose its customers to a greater risk of attack. For years, cryptologists and national security experts have been warning against weakening encryption. Doing so would hurt only the well-meaning and law-abiding citizens who rely on companies like Apple to protect their data. Criminals and bad actors will still encrypt, using tools that are readily available to them.

A Dangerous Precedent

Rather than asking for legislative action through Congress, the FBI is proposing an unprecedented use of the All Writs Act of 1789 to justify an expansion of its authority.

The government would have us remove security features and add new capabilities to the operating system, allowing a passcode to be input electronically. This would make it easier to unlock an iPhone by “brute force,” trying thousands or millions of combinations with the speed of a modern computer.

The implications of the government’s demands are chilling. If the government can use the All Writs Act to make it easier to unlock your iPhone, it would have the power to reach into anyone’s device to capture their data. The government could extend this breach of privacy and demand that Apple build surveillance software to intercept your messages, access your health records or financial data, track your location, or even access your phone’s microphone or camera without your knowledge.

Opposing this order is not something we take lightly. We feel we must speak up in the face of what we see as an overreach by the U.S. government.

We are challenging the FBI’s demands with the deepest respect for American democracy and a love of our country. We believe it would be in the best interest of everyone to step back and consider the implications.

While we believe the FBI’s intentions are good, it would be wrong for the government to force us to build a backdoor into our products. And ultimately, we fear that this demand would undermine the very freedoms and liberty our government is meant to protect.

Tim Cook

The All Writs Act, signed into law by President George Washington, simply reads:

(a) The Supreme Court and all courts established by Act of Congress may issue all writs necessary or appropriate in aid of their respective jurisdictions and agreeable to the usages and principles of law.

(b) An alternative writ or rule nisi may be issued by a justice or judge of a court which has jurisdiction.

https://en.wikipedia.org/wiki/All_Writs_Act

Interpreting the All Writs Act, federal courts are ordering a private business to create a new version of iOS that will allow a user passcode to be entered electronically, enabling it to be opened by brute force.
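To put the brute-force idea mentioned in this post into rough numbers, here is a minimal sketch of the arithmetic: how long it would take to try every numeric passcode once guesses can be fed in electronically with nothing slowing them down. The per-attempt cost is an assumed, illustrative figure, not something taken from the order or from Apple's letter, and the function name is made up for this example.

```python
# Back-of-the-envelope estimate of how long an exhaustive passcode search takes.
# ASSUMPTION: SECONDS_PER_ATTEMPT is a hypothetical per-guess cost chosen for
# illustration; it is not taken from the court order or from Apple's letter.
SECONDS_PER_ATTEMPT = 0.08


def worst_case_seconds(digits: int, per_attempt: float = SECONDS_PER_ATTEMPT) -> float:
    """Seconds needed to try every numeric passcode of the given length."""
    combinations = 10 ** digits  # e.g. 4 digits -> 10,000 possible codes
    return combinations * per_attempt


if __name__ == "__main__":
    for digits in (4, 6):
        hours = worst_case_seconds(digits) / 3600
        print(f"{digits}-digit passcode: up to {hours:.1f} hours")
```

Under that assumption a short numeric passcode falls quickly, which is why the limits on guessing matter more than the passcode itself. A longer alphanumeric passcode changes the picture, since the number of combinations grows with every extra character.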

The FBI feels that cracking into the shooter's phones is paramount to national security. They could be right, to an extent, as the phones may reveal accomplices that are unknown at this time, or other machinations in the works. It's entirely impossible to say. That argument is quantum.

In an ABC News Poll, the majority of respondents feel that Apple should comply with the order and produce a backdoor to iOS. The FBI would attempt to argue that it will only be used in this one circumstance, however I think we all know how that will really work. Tim Cook explains it succinctly.

Some presidential candidates have come out swinging at Apple, saying that they should be forced to comply with the order - very big government thing to do, mind. The media hasn't been too helpful to them, either. However, they finished 1.5% up today on the DOW. Some pundits have non-ironically called it "a victory for ISIS," that we don't surrender more invasive power to the government and sacrifice more of our values.

What an inefficient way to fight terror, anyway: we spend billions of dollars a year combing the internet, phone calls, text messages and email, yet a terrorist organization will always find a low-cost method you haven't thought of to conspire and communicate, necessitating the expenditure of ever-increasing piles of money to shut that avenue off. Horribly inefficient. One pair of shoes and one pair of underwear have cost the TSA billions of dollars and countless hours of lost human productivity. A highly cost-effective pair of attacks that cost a few hundred bucks to carry out, too.

But I understand this is an issue where people will talk at cross-purposes: the defense of privacy and the defense against terrorism. Still, I don't think Apple should comply with the order. Since 2001 we've had one privacy after another stripped away, the government already has a vast array of intelligence apparatuses, and without skipping a beat the intelligence industry will always tell you it needs one more in order to keep us safe.

Surprisingly, Marco Rubio just agreed with me on CNN, pointing out that countries around the world - not subject to US laws - will always be developing encryption now and in the future that will be exploited for nefarious purposes; if Apple gives a back door to iOS, ISIS will stop using it, they will move on to the next tech, and the federal government will spend 10 years and tens of billions of dollars monitoring people's innocuous iMessages to their spouses on the nil chance that they can prevent a future terrorist plot on the service.

Comments

I haven’t really researched the situation yet, but one thing I have to wonder is... the cellphone didn’t belong to the terrorist. It belonged to the government agency for which he worked. Why won’t Apple get the information needed for the owner (the government) of the cellphone?

I haven’t really researched the situation yet, but one thing I have to wonder is... the cellphone didn’t belong to the terrorist. It belonged to the government agency for which he worked. Why won’t Apple get the information needed for the owner (the government) of the cellphone?

Because they'd have to compromise the security of iOS to do so, and they quite rightly don't want to.

Insert the Benjamin Franklin misquote about liberty versus security of your choice here.

As a normal person looking at this, this is about them ****s in the states that shot a load of people. The cops are trying to trace who they were in contact with.

I don't think this is unreasonable. If the bastards had attacked loads of people at a show in the 3 Arena, would people still be concerned about the attackers' privacy?

I realise there need to be safeguards, but hey, this is ridiculous. I think Apple should be ashamed of themselves, not for refusing (they have a point) but for not finding a reasonable solution.

ScouseMouse wrote: »I don't think this is unreasonable. If the bastards had attacked loads of people at a show in the 3 Arena, would people still be concerned about the attackers' privacy?

We're not talking about the attackers' privacy. We're talking about the privacy of all iPhone users.

If we could reduce the risk of terrorism by banning sealed envelopes - so your credit card bills, medical reports, the works, were all sent on postcards - would that be a price you'd be willing to pay?

I think you are spot on with what you are saying, but under the circumstances, one policy does not fit all. They should be able to sit around a table and come to an understanding, that in some cases, they do need to be able to assist.

ScouseMouse wrote: »I think you are spot on with what you are saying, but under the circumstances, one policy does not fit all. They should be able to sit around a table and come to an understanding, that in some cases, they do need to be able to assist.

There is no "in some cases". If you weaken the security of an operating system, the result is an insecure operating system. Not an insecure operating system in some cases; an insecure operating system.

If Apple cave and break iOS so that the government can access encrypted data, you think ISIS will use Apple devices? You think any criminals will use Apple devices? You think ordinary law-abiding citizens might just be put off using Apple devices, knowing that the government has a back door that it would never dream of using except in extreme circumstances?

We don't let the government install cameras in our houses to keep an eye on us just in case it might help them catch terrorists. For the same reasons, we shouldn't let the government bypass encryption just in case it might help them catch terrorists.

I can somewhat understand Apple’s overall position, but the question then becomes: is it good for business that they are now effectively forcing the Federal government to design hacking software, using some of the best hackers in the country, the ‘cyber-ops’ (some avoiding jail time by aiding the government), to get through Apple’s security?

oscarBravo wrote: »We don't let the government install cameras in our houses to keep an eye on us just in case it might help them catch terrorists. For the same reasons, we shouldn't let the government bypass encryption just in case it might help them catch terrorists.

Indeed

On the other hand we entrust our most personal sensitive data with profit-making private companies like banks, ISPs, social media companies, corporations and so on

We give up huge amounts of privacy to get on a plane just because there is a very tiny remote chance of terrorism

We appear to have few problems with cameras filming our almost every move outside the house

And if there's a large attack like 9/11, then it's shown us there can be a very sudden turnaround in public opinion on the issue

If, in a hypothetical situation, the issue were put to a public referendum, the results before, and the results after e.g. the Paris attack - would likely be very different

I don't believe an issue like this is so black and white

The argument of "security of all people" seems a bit of overreach, frankly.

How much less secure is your 'phone in your pocket if this 'backdoor OS' is sitting in a vault in Apple's HQ in Cupertino, and can only be installed onto this 'phone if the FBI physically shows up at Apple's door with it in hand?

In practice, there is no way for Joe Bloggs to know if there is a backdoor in his 'phone or not. We use the 'phone anyway, we are (now) being told by Apple that no such backdoor exists. I would think that most people would work on the basis that their 'phones are reasonably secure. I don't think most people would work on the basis that their 'phones are proof against every hacker and intelligence agency in the world. Indeed, I don't think even Apple would make the claim that their 'phone is proof against every hacker and intelligence agency in the world. Is anyone really prepared to stand up and say that the Chinese Intelligence Agency is incapable of creating, or has not already created, a backdoor-enabled iOS and a system to install it on a 'phone in their possession? How much of the FBI's order is because they can't do it, vs it's easier to order Apple to do it given time and resources?

What I think is the more interesting legal argument is that of forcing a private entity to do work for the government on demand. Can a court order a safe-cracker to open a safe if he doesn't want to do it? I'm not sure it can. That said, the long-term answer (and one already proposed by Senator Feinstein) is legislative regulation: "All future 'phones sold in the US must have the ability for law enforcement, under due warrant process, to access the data. If this ability does not exist, your 'phone may not be sold in the US." It may not help the current San Bernardino case but will apply in the future. My guess is that this is going to backfire: Apple can either comply with the demand on this one 'phone now, or face such regulation in future for all 'phones sold to all people.

Manic Moran wrote: »...the long-term answer (and one already proposed by Senator Feinstein) is legislative regulation: "All future 'phones sold in the US must have the ability for law enforcement, under due warrant process, to access the data. If this ability does not exist, your 'phone may not be sold in the US."

That's a great idea, if you believe that the ability for law enforcement to access your data under due process can't possibly lead to other parties accessing your data without due process.

Could a burglar access my house as it currently stands? Almost certainly. Is that a good reason to consciously engineer a known weakness into my home security, just in case the police ever need to get in?

I have read most of that article, but not all. I know Apple think they invented the world, but I was wondering if the biggest OS in the world, Android, is going to be subject to the same request from the F.B.I.?

oscarBravo wrote: »That's a great idea, if you believe that the ability for law enforcement to access your data under due process can't possibly lead to other parties accessing your data without due process.

Could a burglar access my house as it currently stands? Almost certainly. Is that a good reason to consciously engineer a known weakness into my home security, just in case the police ever need to get in?

Hardly an identical case, though, is it? It's not as if the police have the physical, exclusive, control of your home.

The FBI isn't asking for Apple to give them the backdoor-enabled OS. They're asking Apple to put the OS onto the phone that they have in their vault. Apple putting this OS onto that 'phone has no bearing on any other 'phones in the world, unless Apple chooses to put that OS onto the other 'phones in the world, which the FBI hasn't asked to do.

But the legislature might now force them to do so, since Apple won't go the individual case route.

Manic Moran wrote: »The FBI isn't asking for Apple to give them the backdoor-enabled OS. They're asking Apple to put the OS onto the phone that they have in their vault.

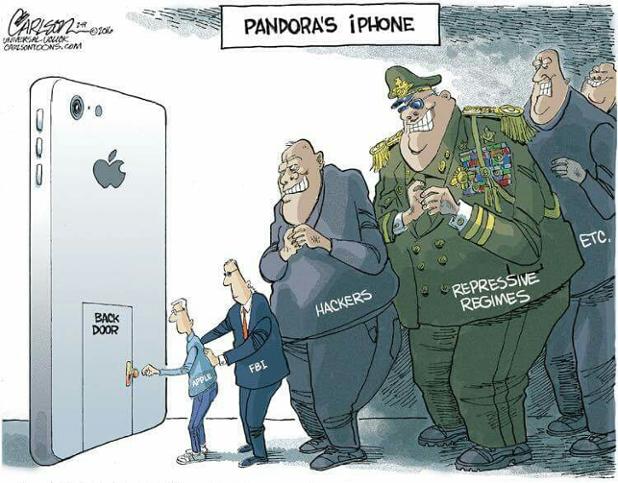

I think this cartoon sums up my feelings on the subject: 0

0 -

oscarBravo wrote: »I think this cartoon sums up my feelings on the subject:

That may be so, but how do you substantiate that feeling into fact?

As it is, there is no backdoor because of Apple's decisions. (They have not chosen to create one).

How are hackers going to get at this backdoor unless Apple makes a decision to allow it? Is there anything in this court order which requires that Apple relinquish positive control of the software? Apple suddenly decides to release it to all 'phones? Unlikely. Unless the hackers hack Apple's servers to get the code, in which case the security of the 'phone is not high on Apple's list of issues. The 'phones at large will be no more or less vulnerable than they were two months ago.

Actually, I found the order itself.

https://regmedia.co.uk/2016/02/17/apple_order.pdf

The judge suggests that Apple code the software in such a way that it will only work on the one specific telephone. They can encrypt that code however they like.

Manic Moran wrote: »That may be so, but how do you substantiate that feeling into fact?

As it is, there is no backdoor because of Apple's decisions. (They have not chosen to create one).

How are hackers going to get at this backdoor unless Apple makes a decision to allow it?

Which do you think is less likely: that hackers access a backdoor that doesn't exist, or that hackers access a backdoor that does?

The wider issue is the precedent that's set by the government ordering a company to compromise the security of its product.

It appears that the reason this backdoor is required is because a government investigator screwed up the process of recovering the required information. If there's a precedent to be set here, I'd rather it was "don't screw up the investigation" than "compromise the security of your product so that we can screw up at will".

oscarBravo wrote: »Which do you think is less likely: that hackers access a backdoor that doesn't exist, or that hackers access a backdoor that does?

Why would it exist?

Let's assume you have an iphone in your pocket right now. What is the chain of events which would lead from some Apple programmer plugging the subject 'phone into his laptop to load the backdoor OS onto it, to an exploitable backdoor appearing on your 'phone in your pocket, say, oh, two years from now? (Let's assume that the Apple programmer for some reason does not code this OS for the shooter's 'phone and does not encrypt that).

oscarBravo wrote: »The wider issue is the precedent that's set by the government ordering a company to compromise the security of its product.

Again, how does your 'phone, in your pocket, become any less secure?

oscarBravo wrote: »It appears that the reason this backdoor is required is because a government investigator screwed up the process of recovering the required information. If there's a precedent to be set here, I'd rather it was "don't screw up the investigation" than "compromise the security of your product so that we can screw up at will".

So, if I understand this correctly, your objection isn't that the government can read all your data. After all, if the password hadn't been reset, then they could have forced the auto-upload, and the data read from the cloud with Apple's help. (They've done it in the past). Basically, you're fine with apple hacking your account for the government as long as due process (eg warrant) is followed.

It's also worth pointing out that not changing the password may not have made any difference anyway. Given the auto-uploads suddenly stopped, it would appear that Farook disabled the auto-upload feature anyway, so the point is likely a red herring. The FBI would want access to the physical 'phone itself to get the latest data. And what if it was a 'phone which had no auto-upload-to-a-cloud feature at all? Where are the privacy principles there? (or in the case of data which is not uploaded to the cloud, as I don't believe everything actually is)

Your point of concern is apparently that 'once the OS is made, then I might get hacked.' Which brings me back to the first question of this post. How does one get from here to there which does not involve either gross incompetence by Apple, criminal collusion by an employee (which could happen without the court order), or a hack of such magnitude that Apple itself can't stop it? And which becomes irreversible?

Manic Moran wrote: »Let's assume you have an iphone in your pocket right now. What is the chain of events which would lead from some Apple programmer plugging the subject 'phone into his laptop to load the backdoor OS onto it, to an exploitable backdoor appearing on your 'phone in your pocket, say, oh, two years from now?

I don't know. Are you arguing that, because neither of us can imagine a specific chain of events that could allow something to happen, that it couldn't possibly happen?

In Tim Cook's words:

Law enforcement agents around the country have already said they have hundreds of iPhones they want Apple to unlock if the FBI wins this case.

Sure, the FBI can claim that they only want this phone unlocked. Do you seriously think that if Apple write a crippled version of iOS that can unlock one phone, that they'll never be strong-armed into doing the same thing again and again and again until it's routine?

He goes on to say:

In the physical world, it would be the equivalent of a master key, capable of opening hundreds of millions of locks. Of course, Apple would do our best to protect that key, but in a world where all of our data is under constant threat, it would be relentlessly attacked by hackers and cybercriminals. As recent attacks on the IRS systems and countless other data breaches have shown, no one is immune to cyberattacks.

Again, we strongly believe the only way to guarantee that such a powerful tool isn’t abused and doesn’t fall into the wrong hands is to never create it.

It's not absurd, or unprecedented, to think that once the FBI wins this ruling, they will have set the legal precedent to do it again. And again.

From there it's easy for them to say "you know, this shouldn't be so bureaucratic each and every time we need to search an iPhone! It costs the taxpayers money after all" and from there, the FBI wins a streamlined process by which they can gain access to phones at an automated whim - a backdoor.

It's not absurd, or unprecedented, to think that once the FBI wins this ruling, they will have set the legal precedent to do it again. And again.

From there it's easy for them to say "you know, this shouldn't be so bureaucratic each and every time we need to search an iPhone! It costs the taxpayers money after all" and from there, the FBI wins a streamlined process by which they can gain access to phones at an automated whim - a backdoor.

That didn't take long.

http://gizmodo.com/justice-department-forcing-apple-to-unlock-about-12-oth-1760749507?utm_campaign=socialflow_gizmodo_facebook&utm_source=gizmodo_facebook&utm_medium=socialflow

In fact it would appear that the San Bernardino case is simply being highlighted because of terrorists. Perhaps the FBI assumes such a high profile case is their golden ticket to getting the precedent they need to open the other 12 phones. How many will it be by the end of the year? Or next year? Do you see how this is not an isolated thing?

oscarBravo wrote: »I don't know. Are you arguing that, because neither of us can imagine a specific chain of events that could allow something to happen, that it couldn't possibly happen?

Not for that line that you pose. For that, I'm arguing that if it does possibly happen (however unlikely that may be), it's no worse than if the backdoor hadn't been invented in the first place.

I think it's probably fair to say that Apple's corporate security is as strong as they can make it. That their iOS software, data, codes, upload programs, patch programs, and everything else 'proprietary' is behind every piece of encryption that they can come up with, probably better than, and certainly no worse than, the encryption on their 'phones. If someone can break that and get the vaulted, firewalled OS, and then break the encryption on that OS, I suspect breaking the 'phone without having to break into Apple's servers in the first place is not going to be particularly beyond their capabilities. And they would still have to get the software onto your phone for it to be effective.

In fact it would appear that the San Bernardino case is simply being highlighted because of terrorists. Perhaps the FBI assumes such a high profile case is their golden ticket to getting the precedent they need to open the other 12 phones. How many will it be by the end of the year? Or next year? Do you see how this is not an isolated thing?

Whoever said it was an isolated incident? What is the legal or moral principle involved here? Your data is your data. It is subject to the same levels of privacy and protection as every other bit of your data. The legal protection of a note scribbled on a piece of paper in your house is subject to the same 4th Amendment due process requirement as the idea of seizing your unpassword-protected computer, getting a tap on your iPhone line, getting records of your text messages sent and received from the 'phone company, getting Apple to crack into your iCloud account or, yes, breaking into your encrypted iPhone. The only legal difference is that in the case of a password, you might not be required to provide that password (5th Amendment, legal precedent in the US is undecided) and you leave the authorities with a technical, not moral, issue to solve (In the UK, you must reveal the password). Nowhere is there some magical additional level of legal or moral protection from due process because you put a password on a device.

Manic Moran wrote: »Whoever said it was an isolated incident? What is the legal or moral principle involved here? Your data is your data. It is subject to the same levels of privacy and protection as every other bit of your data. The legal protection of a note scribbled on a piece of paper in your house is subject to the same 4th Amendment due process requirement as the idea of seizing your unpassword-protected computer, getting a tap on your iPhone line, getting records of your text messages sent and received from the 'phone company, getting Apple to crack into your iCloud account or, yes, breaking into your encrypted iPhone. The only legal difference is that in the case of a password, you might not be required to provide that password (5th Amendment, legal precedent in the US is undecided) and you leave the authorities with a technical, not moral, issue to solve (In the UK, you must reveal the password). Nowhere is there some magical additional level of legal or moral protection from due process because you put a password on a device.

The FBI has tried to argue that this is a "narrow focus" case, https://www.fbi.gov/news/pressrel/press-releases/fbi-director-comments-on-san-bernardino-matter and spokespeople for the FBI have been quoted by several outlets arguing this is a single device case. Notice how the FBI director mentions nothing of other devices in his letter. Political, wouldn't you agree?

The difference with a house is you lock your house. In the event of a warrant, the FBI breaks through the lock, in the same manner a criminal might be able to break the locks.

What's different here is Apple has created a device which cannot be easily broken by anyone, including authorities and criminals. The authorities have the warrant, but they cannot break in, so Apple is forced to weaken the design of the system.

So given that this volume of requests will only increase with time, that leaves us with a couple of options. One is that the DOJ will force Apple to expand its workforce and create a division within Apple solely tasked with receiving warranted phones and performing forensics on them, solely at the expense of the taxpayer. The other is that the government will certainly at some point demand the creation of a backdoor, a means by which they don't have to send every suspect phone around the country to Cupertino to be analyzed in what will amount to a time-consuming process that could take weeks by the time each individual case is settled in the courts, shipped, and analyzed, all at the expense of the taxpayer. And if your political cudgel is terrorism, weeks are an eternity. Certainly, the intelligence industry is not unfamiliar with getting past the courts: https://en.wikipedia.org/wiki/PRISM_(surveillance_program)

The FBI has tried to argue that this is a "narrow focus" case, https://www.fbi.gov/news/pressrel/press-releases/fbi-director-comments-on-san-bernardino-matter and spokespeople for the FBI have been quoted by several outlets arguing this is a single device case. Notice how the FBI director mentions nothing of other devices in his letter. Political, wouldn't you agree?

Probably. Press releases tend to be done for political reasons. Legal arguments usually are not put forward in press releases, that's more a matter for the courts. And it is a single device case. This order applies to one device only. If they want to get it onto another device, they go to court again and get another order.

The difference with a house is you lock your house. In the event of a warrant, the FBI breaks through the lock, in the same manner a criminal might be able to break the locks.

Hackers are criminals, right? So if it's possible for a hacker to break a 'phone (as Oscar Bravo and myself both agree, it's difficult, but one cannot assure that "any and all" situations cannot arise), where's the legal and moral difference between the police doing it to a door lock, and doing it to a 'phone lock? A lock is a lock is a lock.

What if the door lock is actually a 'phone lock, say an electronic keypad and swipe? A typical criminal may just break down the door, but what if the police wanted to sneak in and sneak out, which requires cracking the lock? Fewer criminals can do it; is there a different legal or moral protection standard? Indeed, should law-abiding folks guide their principles by the standards of what criminals can do?

You have actually touched upon (and return to later) my own issue with this order process. As you say, the police break the lock. Or pick the lock. Or they contract a third party (e.g. a locksmith) to pick the lock. However, in such third-party cases, the work is voluntarily done on a contract basis. I see no legal or moral issues with this. Where I am less comfortable, because I simply don't know the precedent, is in forcing that third party to do work (paid or not) even if it doesn't want to.

What's different here is Apple has created a device which cannot be easily broken by anyone, including authorities and criminals. The authorities have the warrant, but they cannot break in, so Apple is forced to weaken the design of the system.

On the one 'phone on which the warrant applies, yes.

So given that this volume of requests will only increase with time, that leaves us with a couple of options. One is that the DOJ will force Apple to expand its workforce and create a division within Apple solely tasked with receiving warranted phones and performing forensics on them, solely at the expense of the taxpayer.

I'm fine with this. It is no different to law enforcement contracting with other external entities such as the aforementioned locksmiths. Law enforcement have a limited budget. If they feel a portion of it is best used contracting with Apple to perform services, that's an allocation decision no different to any other.

The other is that the government will certainly at some point demand the creation of a backdoor, a means by which they don't have to send every suspect phone around the country to Cupertino to be analyzed in what will amount to a time-consuming process that could take weeks by the time each individual case is settled in the courts, shipped, and analyzed, all at the expense of the taxpayer. And if your political cudgel is terrorism, weeks are an eternity. Certainly, the intelligence industry is not unfamiliar with getting past the courts

Agreed. And from the rumblings on Capitol Hill, should Apple fail to accede to the requirements of this order, it seems likely that that is exactly what they are going to try to do. Except that would be done by our elected officials, not by the executive departments. On the legal side, Apple's reputation/privacy argument (legally speaking, the 'irreparable harm' concept) is much stronger when defending against a court order to transmit such an OS to all 'phones or ship all 'phones with one. But that argument gets completely nullified if Congress gets involved.

In fact, it would appear that the San Bernardino case is simply being highlighted because it involves terrorists. Perhaps the FBI assumes such a high-profile case is their golden ticket to getting the precedent they need to open the other 12 phones. How many will it be by the end of the year? Or next year? Do you see how this is not an isolated thing?

I don't know if it's already been mentioned, but Apple has unlocked at least 70 iPhones for the Feds before.

http://www.thedailybeast.com/articles/2016/02/17/apple-unlocked-iphones-for-the-feds-70-times-before.html

It's not absurd, or unprecedented, to think that once the FBI wins this ruling, they will have set the legal precedent to do it again. And again.

From there it's easy for them to say "you know, this shouldn't be so bureaucratic each and every time we need to search an iPhone! It costs the taxpayers money after all" and from there, the FBI wins a streamlined process by which they can gain access to phones at an automated whim: a backdoor.

They want to access that particular phone to gain information about the attacker that left 14 people dead. The request is specific to that type of phone and that type of software.

This is their mandate, their job. I'm not sure what else they are supposed to do. Drop it? Use old-fashioned, obsolete methods?

The undertones in this whole debate are along the lines of: this is some sort of backdoor in all Apple phones (it's not), and the FBI may misuse these new abilities to appropriate people's private data and subsequently abuse that data.

I don't know if it's already been mentioned, but Apple has unlocked at least 70 iPhones for the Feds before.

http://www.thedailybeast.com/articles/2016/02/17/apple-unlocked-iphones-for-the-feds-70-times-before.html

Weren't those with a previous version of iOS? I think they updated the encryption with a later version.

The question has been made redundant for now. The FBI and DOJ have abandoned the lawsuits given that they cracked the 'phone in question. Looks like they'll see if they can get into the other phones they're looking at. Chances are the question will come up again, given Apple is doubtless going to look into changing its security system.

Normally the FBI would notify Apple of a detected vulnerability as it's in the national interest to have public security. It remains to be seen if they will do so on this occasion instead of holding on to the only "key" they can rely upon.


-

More likely now that a hack is known, Apple will look for methods of cracking its own devices to design better ones.

-

More likely now that a hack is known, Apple will look for methods of cracking its own devices to design better ones.

Oh, undoubtedly, but there's no guarantee they'll internally discover the same hack which the FBI and its help used. (They should be trying to hack their own stuff anyway, one would have thought).

Either way, it's a bit of a blow to the perception of security.

Manic Moran wrote: »The question has been made redundant for now. The FBI and DOJ have abandoned the lawsuits given that they cracked the 'phone in question. Looks like they'll see if they can get into the other phones they're looking at. Chances are the question will come up again, given Apple is doubtless going to look into changing its security system.

Normally the FBI would notify Apple of a detected vulnerability as it's in the national interest to have public security. It remains to be seen if they will do so on this occasion instead of holding on to the only "key" they can rely upon

Rather than set a precedent in law, it's handy that this unhackable phone got hacked.

Rather than set a precedent in law, it's handy that this unhackable phone got hacked.

Both handy and unsurprising.

Here's hoping the very simple steps that law enforcement can take in future to recover data are followed instead of this attempt to compel a company to hurt itself.

Great blog here which details the steps missed that would have been sufficient instead of lawsuits - http://www.zdziarski.com/blog/?p=5834

The blog in general covers an awful lot of the case and is really well written imo. - http://www.zdziarski.com/blog/

Deleted User wrote: »Both handy and unsurprising.

Here's hoping the very simple steps that law enforcement can take in future to recover data are followed instead of this attempt to compel a company to hurt itself.

Great blog here which details the steps missed that would have been sufficient instead of lawsuits - http://www.zdziarski.com/blog/?p=5834

The blog in general covers an awful lot of the case and is really well written imo. - http://www.zdziarski.com/blog/

None of which covers the basic point, which still remains unsettled. In the situation that the FBI does everything right and still can't get into the 'phone, should Apple crack its own product, or can it be compelled to? I'm sure eventually a case will come up where the investigation doesn't torpedo itself, and Apple improves its software. The question has merely been deferred to a later point. The FBI has somewhat redeemed its investigative reputation, and Apple has made a mark for itself by publicly standing up for privacy while at the same time getting some egg on its face when that public stand was rendered moot, because the privacy it was advocating proved not to be as thorough as many people believed.
